Automating Database download and restore from Azure Blob Storage

At this time, it appears that BB's nightly backup delivery service does not support delivery to our BB FTP site, so FTP automation is off the table.

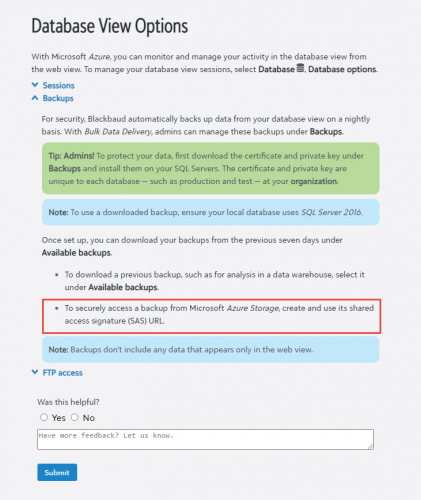

Instead, the backups appear to be available only in Azure Blob Storage.

I've confirmed that automation is possible using AzCopy in a PowerShell-type SQL Server Agent job step. This is an automated transfer of files to local storage, which is then followed by a RESTORE db FROM DISK. Essentially, it's a two-step process: 1) copy to disk, 2) restore from disk.
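A minimal sketch of that two-step process, written in Python rather than as the PowerShell job step described here. It assumes AzCopy v10 and `sqlcmd` are on the PATH, that the SAS URL points at a single .bak blob, and that Windows authentication is used; the function names, and the use of `WITH REPLACE`, are illustrative assumptions. Passing arguments as a list, with no shell in between, keeps the `&` characters in the SAS token from being interpreted by a shell.

```python
from __future__ import annotations

import subprocess


def azcopy_download_cmd(blob_sas_url: str, local_path: str) -> list[str]:
    """Step 1: `azcopy copy <source> <destination>` downloads the blob to disk."""
    return ["azcopy", "copy", blob_sas_url, local_path]


def sqlcmd_restore_cmd(server: str, database: str, bak_path: str) -> list[str]:
    """Step 2: RESTORE ... FROM DISK, run through sqlcmd."""
    db = database.replace("]", "]]")    # escape for a [bracketed] identifier
    path = bak_path.replace("'", "''")  # escape for a T-SQL string literal
    # WITH REPLACE (an assumption) overwrites the previous night's local copy.
    query = f"RESTORE DATABASE [{db}] FROM DISK = N'{path}' WITH REPLACE;"
    # -E: Windows (trusted) authentication; -b: non-zero exit code on SQL errors.
    return ["sqlcmd", "-S", server, "-E", "-b", "-Q", query]


def download_and_restore(blob_sas_url: str, local_path: str,
                         server: str, database: str) -> None:
    # check=True raises CalledProcessError if a step fails,
    # so the restore never runs after a failed download.
    subprocess.run(azcopy_download_cmd(blob_sas_url, local_path), check=True)
    subprocess.run(sqlcmd_restore_cmd(server, database, local_path), check=True)
```

If the data and log file paths recorded inside the backup don't exist on the local server, the RESTORE also needs `MOVE` clauses, which this sketch leaves out.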

However, the most direct solution, and the one I'm interested in, would be a RESTORE db FROM URL, using the SAS credential both to access the backup and to download/restore it in one step.

Has anyone gotten this to work? I get a permissions error.

Below is the documentation that leads me to believe this should be possible.

What I've gotten so far from BB is that the SAS is intended for manual use with Azure Storage Explorer. It does indeed work for that purpose, but it should work equally well for an automated process.

I'm just missing some piece of the puzzle.

Thanks!

https://webfiles.blackbaud.com/files/support/helpfiles/rex/content/bb-database-view-options.html
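For reference, a sketch of the one-step RESTORE ... FROM URL setup described above, generated from Python only so it can be checked. It assumes SQL Server 2016 or later and a container-level SAS URL. For a SAS credential, SQL Server expects the credential to be named after the container URL, the identity to be `SHARED ACCESS SIGNATURE`, and the secret to be the token without its leading `?`; the RESTORE then names the blob URL without the token and without a WITH CREDENTIAL clause. The helper names and `WITH REPLACE` are hypothetical choices. As found later in this thread, this path only works when the .bak is stored as a block blob.

```python
from __future__ import annotations

from urllib.parse import urlsplit, urlunsplit


def split_sas_url(sas_url: str) -> tuple[str, str]:
    """Split a container SAS URL into (container_url, sas_token)."""
    parts = urlsplit(sas_url)
    container_url = urlunsplit((parts.scheme, parts.netloc, parts.path.rstrip("/"), "", ""))
    return container_url, parts.query


def sql_literal(value: str) -> str:
    """Quote a value as a T-SQL string literal, doubling embedded quotes."""
    return "'" + value.replace("'", "''") + "'"


def sas_credential_sql(container_url: str, sas_token: str) -> str:
    """CREATE CREDENTIAL named after the container URL, holding the SAS token."""
    name = container_url.replace("]", "]]")
    secret = sas_token.lstrip("?")  # SQL Server wants the token without '?'
    return (
        f"CREATE CREDENTIAL [{name}]\n"
        "WITH IDENTITY = 'SHARED ACCESS SIGNATURE',\n"
        f"SECRET = {sql_literal(secret)};"
    )


def restore_from_url_sql(database: str, blob_url: str) -> str:
    """RESTORE ... FROM URL; the URL carries no token and no WITH CREDENTIAL."""
    db = database.replace("]", "]]")
    # WITH REPLACE is an assumption: overwrite last night's local copy.
    return f"RESTORE DATABASE [{db}]\nFROM URL = {sql_literal(blob_url)}\nWITH REPLACE;"
```

Usage: `print(sas_credential_sql(*split_sas_url(container_sas_url)))` once, then `restore_from_url_sql("RE_Nightly", container_url + "/nightly.bak")` for each restore, where the database and file names are placeholders.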

Comments

-

Neal, sorry, this doesn't answer your question, but your post has intrigued me. I thought that to get a backup restored on cloud-hosted Azure, you were dependent on BB to do it for you, for a one-time fee. Am I understanding correctly that a person can get "Bulk Data Delivery" added to their NXT subscription and gain access to it themselves?

-

Whoa! I didn't even know this was an option.

I thought we were stuck with the old monthly "Contact the Help Desk, Request a Backup, Wait a Day or Two, Receive Notice that a Backup is in your FTP space, Manually Download, Restore in Your Own Environment" method.

This is kind of exciting.

-

Hi Faith,

No, this is a service from Blackbaud that delivers backups daily for restore to a local SQL Server, as opposed to restoring either your cloud-based production or sandbox environment.

Neal Zandonella

Vice President of Data & Information Services

Montana State University Alumni Foundation

406-994-4912

CONFIDENTIALITY: This email (including any attachments) may contain confidential, proprietary and privileged information, and unauthorized disclosure or use is prohibited. If you received this email in error, please notify the sender and delete this email from your system. Thank you.

-

No, sorry if I was unclear. I'm referring to the service from Blackbaud that delivers backups daily for restore to a local SQL Server, as opposed to being used to restore your cloud-based production or sandbox environment.

-

That is the concept. We contracted for nightly backups; however, they don't appear to be available via FTP, as we assumed when we purchased them. The backups are only available in Azure, which poses its own challenges for automated retrieval. I'll post more as I learn more from BB.

-

Neal, to clarify: although this backup can't be "restored" to the cloud environment without BB assistance, could it still be opened locally, so that one could, hypothetically, export any data needed to import into the cloud-hosted environment? I'm thinking about all the KB articles that advise "backing up before imports," and thinking that if an import error occurred, one could simply import the corrected data back into the system if they had access to the nightly backup files themselves. Am I on the right track here?

I've only ever had to restore our database once in 10 years, back when we were self-hosted, but it creates a cautious habit.

-

Hi Faith, yes, the local database could be used to generate import lists for a variety of use cases, including correcting a prior import mistake. Typically it would be used for reporting, or to generate ad hoc lists that are too complicated for the RE NXT native list, query, or export tools.

Neal

-

Hi Neal, did you run CREATE CREDENTIAL on your local SQL instance to access the file on Azure? Once that's in place, from what I can tell, you should be able to run RESTORE db FROM URL = '' WITH CREDENTIAL = ''. There's some more info here: https://www.sqlshack.com/how-to-connect-and-perform-a-sql-server-database-restore-from-azure-blob-storage/.

Let me know how it goes.

Mike

-

Yes, thanks for the suggestion.

After creating the credential, there is no need to use RESTORE Db FROM URL WITH CREDENTIAL. The WITH CREDENTIAL clause is only used explicitly when you are not using a SAS but are going directly to the blob storage with an explicit secret key. BB uses a SAS as a generic credential for all customers, instead of having to provision an individual secret key per customer.

We've discovered what the core issue is. Unfortunately, RESTORE Db FROM URL with a SAS credential can only be used with block blobs, and BB is storing the .bak files as page blobs. We are now clear on the issue. I doubt BB will be willing to change the blob type of their backups, but I've asked them to, in order to facilitate automated restores.

Our current workaround is the two-step: SQL PowerShell with AzCopy, then restore from disk. It works great, although it does not take advantage of SQL Server's restore-from-URL capabilities.

Thanks again. I'll post if we make any further progress with BB.

Neal
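A minimal sketch for checking which kind of blob a delivered .bak is before choosing a restore path. It assumes the SAS URL points at the blob itself and grants read access. The HEAD request corresponds to Azure's Get Blob Properties operation, whose `x-ms-blob-type` response header is `BlockBlob`, `PageBlob`, or `AppendBlob`; the function names and returned labels are hypothetical.

```python
import urllib.request


def get_blob_type(blob_sas_url: str) -> str:
    """HEAD the blob (Get Blob Properties) and return its x-ms-blob-type header."""
    req = urllib.request.Request(blob_sas_url, method="HEAD")
    with urllib.request.urlopen(req) as resp:
        return resp.headers["x-ms-blob-type"]


def restore_strategy(blob_type: str) -> str:
    """Pick a restore path, following the block/page finding in this thread."""
    # RESTORE ... FROM URL with a SAS credential needs a block blob;
    # anything else falls back to the two-step download-then-restore.
    if blob_type == "BlockBlob":
        return "restore_from_url"
    return "download_then_restore"
```

Usage: `restore_strategy(get_blob_type(blob_sas_url))`, where `blob_sas_url` is the full per-file SAS URL.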

-

Hi Neal. Thanks for your post. I believe we can help you.

Mission BI Reporting Access™ and SQL Access™ are Blackbaud Marketplace Applications that enable standardized data source connections to your RE NXT™ and FE NXT™ data from virtually any reporting platform such as Microsoft Power BI, Tableau, or Crystal Reports.

Reporting Access™ and SQL Access™ are much more than simple API connectors. They are specialized database solutions, hosted and managed in the secure Mission BI Cloud, and optimized as data source connections for advanced custom reporting.

To be clear, our direct database access solution would eliminate the need for the daily backup and automate near-real-time refreshes in a secure SQL environment within the Mission BI warehouse.

Let's jump on a call to become acquainted and discuss how I can help. Please use the link below to drop a time on my calendar for us to connect.

View my schedule and book a conversation with me HERE.

Best,

John

John Wooster

CRO | Mission BI, Inc.

843.491.6969 | https://www.missionbi.com

-

@Neal Zandonella

> Our current workaround is to do the two-step, using SQL PowerShell AzCopy, then restore from disk. Works great, although it does not take advantage of SQL Server's restore from URL capabilities.

@Neal, this would work for my organisation; a two-step process is fine. Can you give me any pointers on how you got AzCopy to work? I have tried this with all the advice I have found and still can't get the script to work.

Mick
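One common reason an AzCopy download fails with a container SAS (a general pitfall, not a diagnosis of this case) is appending the file name after the SAS token instead of inserting it into the path before the `?`. A small sketch of building the per-file URL, assuming a container-level SAS URL; the function name is hypothetical. When the result is passed to `azcopy copy` from PowerShell, it also needs to be quoted, since an unquoted `&` is treated as an operator rather than as text.

```python
from urllib.parse import quote, urlsplit, urlunsplit


def blob_url_from_container_sas(container_sas_url: str, blob_name: str) -> str:
    """Insert blob_name into the URL path, keeping the SAS query string intact."""
    parts = urlsplit(container_sas_url)
    # quote() percent-encodes spaces etc. but leaves '/' so virtual folders work.
    path = parts.path.rstrip("/") + "/" + quote(blob_name)
    return urlunsplit((parts.scheme, parts.netloc, path, parts.query, ""))
```

The resulting URL is the source argument for `azcopy copy "<url>" "<local path>"`.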
