Nightly resync of constituent data with another system, using Power Automate?

Hello!

We currently have a process where we export constituent data from Raiser's Edge 7 (DB View) using the Export functionality, run transformations on the exported csv, and then import it into another system. There are only about 6 columns of this data that we pull from the constituent records. Alot about this process is currently pretty manual.

I'd like to be able to change this process to where we schedule a Power Automate flow that does this pull every night, does the transformations, and then, using the other system's REST API, run an import (add or update) of the transformed data into the other system.

My problem is that we currently have over 150k constituent records that I'd be pulling and grabbing these columns from. Isn't there a 5000 operation limit per flow? I haven't tried to implement this yet, so perhaps I'm not thinking about it correctly, but if I'm pulling records from the main constituent data table and then adding a column from one of the constituent custom fields, isn't that a single operation for each row? If so, I imagine that I'd hit the limit long before I can finish. Is this the right way to do this? Is anyone else doing anything similar?

Comments

-

Hello!

We currently have a process where we export constituent data from Raiser's Edge 7 (DB View) using the Export functionality, run transformations on the exported csv, and then import it into another system. There are only about 6 columns of this data that we pull from the constituent records. Alot about this process is currently pretty manual.

I'd like to be able to change this process to where we schedule a Power Automate flow that does this pull every night, does the transformations, and then, using the other system's REST API, run an import (add or update) of the transformed data into the other system.

My problem is that we currently have over 150k constituent records that I'd be pulling and grabbing these columns from. Isn't there a 5000 operation limit per flow? I haven't tried to implement this yet, so perhaps I'm not thinking about it correctly, but if I'm pulling records from the main constituent data table and then adding a column from one of the constituent custom fields, isn't that a single operation for each row? If so, I imagine that I'd hit the limit long before I can finish. Is this the right way to do this? Is anyone else doing anything similar?

Hey Ramon,

Do you need to pull all 150K records each night? One way to minimize loops, etc is by filtering on those last modified “yesterday” (assuming you're running it ofter midnight).

1 -

@Ramon Carroll

Hi, it's possible to do SKY API on your process (MOST likely). It depends on what field of data you trying to get. For example: Request no email and No valid address is not available on Constituent List SKY API endpoint.We have close to 500K constituent records and 1.5M gift records and is able to run data warehouse on all the records every 4 hours, this is possible using “iterative sync" on some of the larger data, however you will have to consider “deletion”.

Yes, there is a 5000 limit per api call, so you have to loop and call multiple times, 150k record is 30 loops.

if you only do nightly sync and 150K record, you should be fine doing a full sync nightly so you don't have to worry about deleted record.

Constituent Custom field is a different api call, so you will have to make additional call. the data will have “parent id” meaning the constituent record id of the constituent, which you can then use “join” and transformation in whatever tool you use.

1 -

@Glen Hutson

you can record the “next_link” on the list constituent API somewhere, and it will always give you what has changed since the last api call.however, issue is with deleted, you will not know what has been deleted.

You can register a “constituent delete webhook” and delete the constituent record off whereever you are persisting the data. not hard, not too easy neither.

0 -

@Ramon Carroll

Oh, just want to clarify a question you were asking if my original reply wasn't clear.5000 is not “operation limit per flow”. there is a 5000 records returned per API call. So you can have 1 flow, loop though 30x of 5000 records to get your 150K constituent record in 1 flow.

1 -

@Glen Hutson

That's a good question. I guess if I can run one big add/update import the old way, and then set up this flow to start running immediately after that, this should reduce the amount of data being worked with. I don't know why I didn't think of that.Thanks for the insight!

0 -

@Alex Wong

Perfect! This is what I needed to know. I'm so glad I went ahead and asked the question!0 -

@Alex Wong

Yes, you’re right. I clearly misunderstood the meaning of that limit, so thanks for homing in on that one.I haven’t done much data manipulation or ETL in Power Automate, just basic business process workflows. I tend to use Python or Power Query/PBI for ETL, so I’m figuring out how to pull certain fields out of the JSON output (we only need about 6 columns or so), make some formatting changes, and transform certain values into their corresponding unique IDs in the other system. I'm going to bookmark this conversation in case I bump my head against a wall while trying to build this flow. There don’t seem to be many free resources online for doing data manipulation in Power Automate.

0 -

this will depend on how you are persisting the data you get nightly.

I use Azure SQL database, so when calling SKY API to get the 5000 constituent records at a time, I can easily use OPENJSON expression to parse the JSON returned and access specific properties within it and store in my relational database.

In power automate, there is also a SELECT action that's part of the Data connector, which allows you to select specific “property” within a JSON data object, which then you can process into CSV or Excel on cloud storage (SharePoint), which can be use in PBI/Powe Query

0 -

@Alex Wong

Just checking on this one - the next link is a key for the data set being returned. I've always only used it to iterate through all the records when there are more than 5000 to return. How long is that next link valid for, can you use it days or weeks later to get changes since the last check?0 -

@SmartTHING Developers

Hi, I do not know how long nexk_link is good for, I use it every 4 hours to get latest changes since 4 hours ago. Originally API call is with date_modified in parameter for sort key based on last date modified. Question maybe @Ben Wong can answer.0 -

@Alex Wong @SmartTHING Developers I confirmed with the team that the next_link does not expire, so can be used indefinitely.

1 -

@Ben Wong

That's an excellent bit of news. Brilliant.0 -

@SmartTHING Developers

I’ve just located this “next_link” property that you were referring to. Perhaps my imagination isn't working too well at the moment, but would you indulge me in how you are using this property in a Power Automate action to loop through all of the records?I’ve just built a loop in Power Automate that iterates through all of the records. Basically, the same way I would have done it in JavaScript or Python. It works, but it feels a bit wonky to build and maintain these via Power Automate (I have to track several states using variables throughout the loop). Perhaps your use of the “next_link” would be easier?

0 -

@Ramon Carroll Hi Ramon, you need to loop using the next_link URL on each call and end if no records are returned (or less than the page size). Tbh we are using this outside of powerautomate but that approach should work. Cheers. Warren

1 -

@SmartTHING Developers

Okay, I understand now. I’m using Blackbaud’s Power Automate connector, which constructs and performs the API calls for me based on my inputs. The option to use the “next_link” property, even though it’s available in the output, won’t work with my method. I’d have to forgo the use of Blackbaud’s NXT connector and make the API calls directly myself.However, if I were to do that (whether through Power Automate or some other tool, such as a script), it's good to know that the property is available for use. Thank you!

0 -

@Ramon Carroll

You cannot use the Blackbaud connector's action, you need to use the Blackbaud NXT Add-in connector > Send HTTP request action.You can learn how here: https://community.blackbaud.com/forums/viewtopic/586/62295

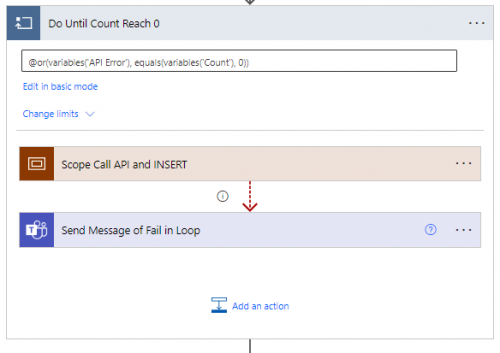

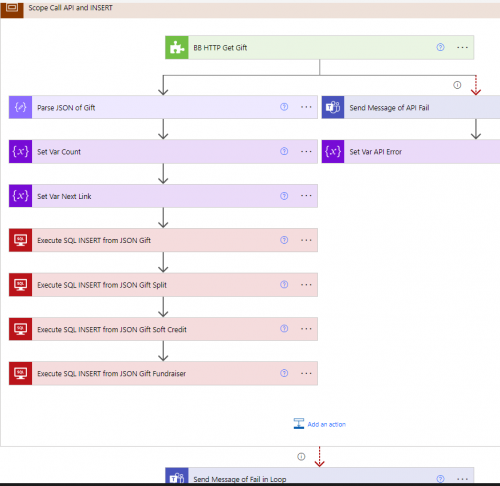

This is the basic idea:

loop until Count from API call is 0 or API Error is true

I persist data in azure sql database, so it's easily for me to do 4 actions without looping each of the 5000 gift records that is returned from 1 API call. Depending on where you are persisting your data, you may need to loop, and if you do have to loop each gift, then your flow will run A LOT longer. 4 actions b/c I am recording each of the 1-many relationships of gift into its own relational table: gift splits, gift soft credit, and gift fundriaser

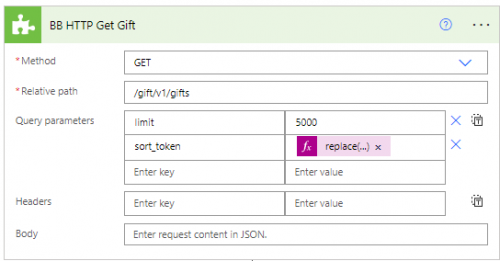

In the BB HTTP Get Gift action, here's what it looks like:

- the difficulty is you can't use next_link directly

- use of relative path

- query parameter cannot be added on the relative path, meaning the sort token must be “parsed” out

here's my expression specifically for gift:

replace(replace(replace(split(variables('Next Link'), '?')[1],'limit=5000',''), '&', ''),'sort_token=','')

As I know my next_link ONLY have 1 other query parameter on it “limit=5000”, so i'm using replace to get rid of it, then the & sign that separate query paramter, then finally get rid of the query parameter field name of sort_token=

good luck

0 - the difficulty is you can't use next_link directly

-

@Alex Wong

Wow, this is very helpful, Wong! I appreciate the pictures too! I'll take a look over it all, and let you know if I have questions.0

Categories

- All Categories

- 6 Blackbaud Community Help

- High Education Program Advisory Group (HE PAG)

- BBCRM PAG Discussions

- Luminate CRM DC Users Group

- DC Luminate CRM Users Group

- Luminate PAG

- 186 bbcon®

- 1.4K Blackbaud Altru®

- 389 Blackbaud Award Management™ and Blackbaud Stewardship Management™

- 1K Blackbaud CRM™ and Blackbaud Internet Solutions™

- 14 donorCentrics®

- 355 Blackbaud eTapestry®

- 2.4K Blackbaud Financial Edge NXT®

- 616 Blackbaud Grantmaking™

- 542 Blackbaud Education Management Solutions for Higher Education

- 33 Blackbaud Impact Edge™

- 3.1K Blackbaud Education Management Solutions for K-12 Schools

- 909 Blackbaud Luminate Online® and Blackbaud TeamRaiser®

- 207 JustGiving® from Blackbaud®

- 6.2K Blackbaud Raiser's Edge NXT®

- 3.5K SKY Developer

- 236 ResearchPoint™

- 116 Blackbaud Tuition Management™

- 375 YourCause® from Blackbaud®

- 160 Organizational Best Practices

- 232 The Tap (Just for Fun)

- 31 Blackbaud Community Challenges

- Blackbaud Consultant’s Community

- 19 PowerUp Challenges

- 3 Raiser's Edge NXT PowerUp Challenge: Gift Management

- 4 Raiser's Edge NXT PowerUp Challenge: Events

- 3 Raiser's Edge NXT PowerUp Challenge: Home Page

- 4 Raiser's Edge NXT PowerUp Challenge: Standard Reports

- 4 Raiser's Edge NXT PowerUp Challenge #1 (Query)

- 71 Blackbaud Community All-Stars Discussions

- 47 Blackbaud CRM Higher Ed Product Advisory Group (HE PAG)

- 743 Community News

- 2.8K Jobs Board

- Community Help Blogs

- 52 Blackbaud SKY® Reporting Announcements

- Blackbaud Consultant’s Community

- 19 Blackbaud CRM Product Advisory Group (BBCRM PAG)

- Blackbaud Francophone Group

- Blackbaud Community™ Discussions

- Blackbaud Francophone Group